Very interesting day in Ottawa yesterday preparing for the CFTPA presentation to the CRTC today. Lots of involved conversation with extremely intelligent individuals… I’m coming to the startling realization that this “government” of ours actually entails a lot of hard work. Who knew?

Having read, spoken, and thought more about the myriad aspects of this net neutrality hearing in the last week than I ever have in my life (and likely more than is healthy), I thought it would be a good time to do a little follow-up to the series of posts I’ve written on this issue, focusing primarily on why the average end-user, with little interest in public policy, should care.

The problem the Net Neutrality “movement” has is somewhat similar to the one faced by the ubiquitous WTO protesters: everyone’s in it for a different reason and with completely different politics. For every libertarian who backs Net Neutrality to guarantee their freedom of net access, another decries any non-market intervention in industry. For every network engineer desperate to keep blanket traffic shaping off their protocols, there’s another who could argue that legislation would limit the ability to improve end-user service quality.

I think my viewpoint boils down to this: the majority of these hearings globally (and the Canadian proceedings specifically) have centered on traffic management of BitTorrent. As a content producer, I have a mixed relationship with BitTorrent. I have used it as a legal, valuable distribution tool, and I have seen it used to pirate works that cost me money. (I don’t mean the abstract “piracy costs the industry billions of dollars”, which I still believe is mostly distracting nonsense; I mean a concrete comment on a torrent tracker that essentially said “thanks, I was just about to go buy this online”.) But BitTorrent is nothing if not a giant red herring. Gopher, Usenet, zero-day websites, Kazaa, Napster, LimeWire, Winny, Tor… all are, essentially, placeholders for “any technology”.

BitTorrent is only particularly interesting in this instance because it has two distinct characteristics:

- It has certain “P2P” tendencies that make it difficult to manage on a network

- It is popular

Everything else (for the purpose of Net Neutrality) is distracting chaff.

Well, guess what? Pretty much any technology introduced from this point forward will have “P2P tendencies that (will) make it difficult to manage on a network”. World of Warcraft has P2P tendencies now. New VoIP applications have P2P tendencies. Flash (one of the most widely used technologies worldwide) is starting to adopt P2P tendencies. So really, the only thing that sets BitTorrent apart at this point in time is that it’s popular. Is that the precedent we are willing to set? That when a technology is adopted on a scale the original network design never anticipated, the optimum management technique is to strangle it? I heard a great line today (I haven’t asked permission, so I won’t attribute it): if we had judged YouTube’s potential on what it was in 2005 (crotch kicks and cat videos), it never would have become such a platform for independent content and political discourse (and, of course, high-def crotch kicks and cats playing piano).

Maybe I, personally, wouldn’t be entirely heartbroken if BitTorrent were throttled out of usefulness… but what about when the next “popular” but “difficult” application is YouTube, or iTunes, or Skype, or my independent video distribution service? How technologies are used changes. Which technologies we use changes. If our response to those technologies is to be consistent, we need to make sure it consistently fosters a future we feel is worth working for, and doesn’t kill the goose before it lays any egg at all, let alone a golden one.

I can’t see a future of exciting new development opportunities fostered on a network where content judgements of any stripe are left to ISPs that have their own content interests. That’s not a slight on their character, nor a suggestion of impropriety; rather, it would be improper if they didn’t use that leverage to prioritize their own vision of the future. That’s how the future is built: out of battling self-interests. But I do think (or hope) that there are more people with a self-interest in a future with an even playing field that they can build on.

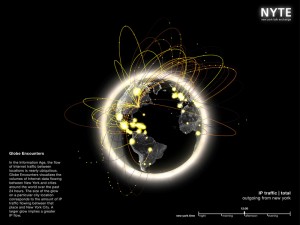

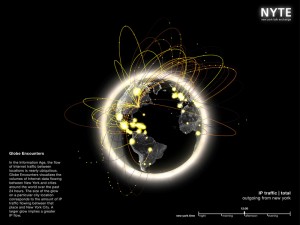

When people ask me why I get so revved up about technology, I generally talk about how I can now do things I couldn’t have imagined when I first logged on to the “Internet” fifteen years ago. Not only things that would literally have seemed like magic: I can tell different stories, to different people, in ways that would quite literally have seemed like science fiction. I would like to live in a world where the next fifteen years are just as vibrant, creative, and revolutionary for how we, as humanity, tell stories to each other.

![By David Crow; Pawyilee [Public domain], via Wikimedia Commons](http://www.bradfox.com/blog/wp-content/uploads/2011/03/Elephant_crossing.jpg)

Oh Wikimedia Commons, is there any topic you don't have the perfect image for?